Introduction

When you buy a car, how can you evaluate its quality? Exactly: you test-drive it. While driving, you pay attention to things like the ease of steering, the smoothness of the ride and the responsiveness of the brakes. Only after making sure that the car meets your expectations on these points will you buy it.

The same applies to any software product, be it a website, a mobile app or a banking application. An online store where you want to buy a present for your sweetheart may appear fine at first, but as you browse more of its pages, you may run into mislabeled products, and a checkout error may even prevent your purchase.

This is what makes quality control (QC) so important in software development. Without QC, you cannot be certain that your software works properly and meets customers’ expectations. And the cost of an error can be extremely high, in terms of both the company’s finances and its reputation.

In 1999, a software failure caused NASA to lose the $125 million Mars Climate Orbiter.

In 2012, trying to replace its rival’s product, Google Maps, Apple rushed to introduce its own maps app in iOS. As a result, entire lakes, train stations, bridges and well-known landmarks in Apple Maps were misplaced, mislabeled or missing altogether, which undermined consumer trust in the product and in the company itself.

According to research by CISQ, poor-quality software cost companies about $2.08 trillion in 2020 in the US alone.

That is why at SaM Solutions we place special emphasis on the quality of the software we develop for our clients.

Quality Assurance (QA), Quality Control (QC) and Testing

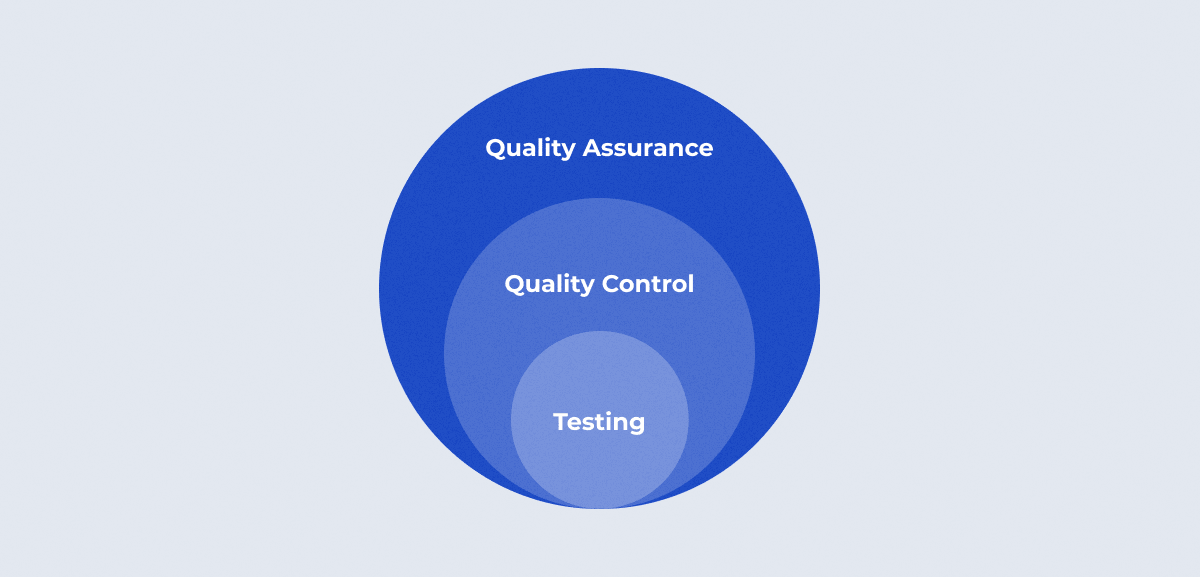

In everyday usage, the term “quality control” (QC) is often confused with “quality assurance” (QA), and the two are used interchangeably. At SaM Solutions, we treat them as different concepts.

Of course, they have much in common. Both terms contain the word “quality”, which is the main goal of both activities: quality control and quality assurance alike aim to deliver the highest possible quality in the software product.

However, as components of the same quality management activity, QC and QA focus on different aspects of it. In particular, QC is intended to detect defects, while QA is all about defect prevention.

Quality assurance is the broader term. It is more concerned with the organizational aspects of quality management. As defined in ISO 9000, it is a “part of quality management focused on providing confidence that quality requirements will be fulfilled”. It implies a proactive approach: the goal of QA is to improve development and testing processes so that defects do not occur during software development.

Quality control, on the other hand, is a reactive process. It aims to identify defects after the product is developed. According to ISO 9000, QC is a “part of quality management focused on fulfilling quality requirements”.

To put it simply, QC can be defined as the process of ensuring that the software product meets customers’ expectations. Or, from another perspective, it is the process of evaluating a software item to find any differences between the actual output and the expected output for a given input. Quality control mainly involves the testing process, which is why “software testing” is often used as a synonym for QC.

Differences between quality assurance (QA) and quality control (QC)

Comparison Table of QA vs QC

| Quality assurance (QA) | Quality control (QC) |
| --- | --- |
| Quality assurance is focused on ensuring that quality requirements will be met. | Quality control is focused on checking whether quality requirements have been fulfilled. |
| As a proactive process, quality assurance aims to prevent defects. | The goal of quality control is to identify and correct defects. Therefore, it is a reactive process. |
| Quality assurance is process-oriented: it focuses on how the software is developed. | Quality control is product-oriented: it focuses on the final product and its conformance to the quality requirements that follow from customers’ expectations. |

Stages of Software Testing Process

Essentially, the QC process can be divided into six key stages: requirements analysis, test strategy, test planning, test design, test execution and reporting. Depending on the methodology in use (e.g., Agile, waterfall, V-model), these stages may differ.

Requirements analysis

The first step is an analysis of the requirements for the software product. At this stage, software testers define (and make sure they understand) user expectations for the system being developed or modified.

This helps the team deliver the desired result and avoid any subsequent fixes. Without a correct road map, even the optimal technology stack, perfect code, or comprehensive testing will prove useless since the final software will not meet the needs of whomever it is intended for.

To be helpful, requirements should be clear and measurable. For this purpose, they have to be written down in an easily understandable document, which is drawn up after thorough discussions with the team or the product owner.

Test Strategy

The Test Strategy is derived from the outcome of the requirements analysis stage. It establishes standards for testing processes and activities.

Some companies include the Test Strategy in the Test Plan, which is acceptable and common for smaller projects. Larger projects, on the other hand, have one Test Strategy document and a separate Test Plan for each phase or level of testing.

Test Planning

A test plan is a living document that is updated as needed. It is essentially a blueprint for how the testing activity will be performed.

According to ISTQB, a test plan is “a document describing the scope, approach, resources, and schedule of intended test activities.” The more detailed and comprehensive the plan, the more likely the testing activity is to succeed.

The test plan is created by the test lead or test manager, and it describes what to test, what not to test, how to test, when to test, and who will perform each of the tests. The test plan also covers the environment and tools that will be required, resource allocation, the test techniques that the testers must follow, risks, and a contingency plan.

According to IEEE recommendations, the content of a standard test plan includes, among other things, test items, software risk issues, features to be tested, features not to be tested, the approach, item pass/fail criteria (or acceptance criteria), test deliverables, test tasks and the schedule.

Test Design

After preparing the test plan, the software testing team faces a challenge: there is an infinite number of different tests that they could run, but not enough time to run all of them. Therefore, the testers should select a subset of tests. This subset must be small enough to execute in the time available, yet comprehensive enough to ensure that bugs are identified.
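One simple way to picture this trade-off is a greedy selection under a time budget. The sketch below is only an illustration, not a technique prescribed here: the `CandidateTest` type, the 1 to 5 risk scale and the sample tests are all hypothetical, and real teams usually combine such prioritization with test design techniques.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateTest:
    name: str
    minutes: int  # estimated time needed to run the test
    risk: int     # hypothetical scale: 1 (low) to 5 (high), judged by the team


def select_tests(candidates: List[CandidateTest], budget_minutes: int) -> List[CandidateTest]:
    """Greedy pick: highest risk first, shorter tests first on ties.

    Tests that do not fit into the remaining budget are skipped.
    """
    chosen = []
    remaining = budget_minutes
    for test in sorted(candidates, key=lambda t: (-t.risk, t.minutes)):
        if test.minutes <= remaining:
            chosen.append(test)
            remaining -= test.minutes
    return chosen


# Made-up candidates for illustration only.
candidates = [
    CandidateTest("login", minutes=10, risk=5),
    CandidateTest("checkout", minutes=30, risk=5),
    CandidateTest("search", minutes=20, risk=3),
    CandidateTest("profile page", minutes=15, risk=2),
    CandidateTest("footer links", minutes=5, risk=1),
]

selected = select_tests(candidates, budget_minutes=60)
print([t.name for t in selected])
```

With a 60-minute budget, the three highest-risk tests fill the available time, so the two low-risk tests are left out of this run.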

For this purpose, it is necessary to write specific test conditions and test cases on the basis of the test objectives defined during test planning. This process is called “test design.”

According to the ISTQB Glossary, a test condition is an item or event of a component or system that could be verified by one or more test cases, e.g., a function, transaction, feature, quality attribute, or structural element.

Let’s say QC engineers need to test a new email application. In this case, one of the test conditions could be the login functionality.

A test case describes the steps necessary to test a particular piece of functionality or a module, as well as the expected and actual results. For example, a test case for the email application could check what happens when an invalid username and password are entered.
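As a rough illustration, test cases for the login condition could look like the sketch below, written with Python's standard `unittest` module. The `login` function and the `VALID_USERS` data are hypothetical stand-ins for the real email application, which a real test would call instead.

```python
import unittest

# Hypothetical stand-in for the email application's account data.
VALID_USERS = {"alice@example.com": "correct-horse"}


def login(username: str, password: str) -> bool:
    """Return True only when the username exists and the password matches."""
    return username in VALID_USERS and VALID_USERS[username] == password


class LoginTestCase(unittest.TestCase):
    """Test cases covering the 'login functionality' test condition."""

    def test_valid_credentials_are_accepted(self):
        self.assertTrue(login("alice@example.com", "correct-horse"))

    def test_invalid_username_is_rejected(self):
        self.assertFalse(login("mallory@example.com", "correct-horse"))

    def test_invalid_password_is_rejected(self):
        self.assertFalse(login("alice@example.com", "wrong-password"))
```

Each method encodes the steps (call `login` with specific input) and the expected result (the assertion). The actual result is whatever `login` returns when the suite is run, for example with `python -m unittest`.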

Test Execution

Once the test design is ready, it’s time to execute it. During this phase, manual testers run the test cases that were created during test design, while test automation engineers use a selected framework (e.g., Selenium) to execute automated test scripts and generate test reports.

If the actual and expected results of a test case differ, the test engineer files a bug (i.e., a defect) in the bug tracking system (e.g., Jira, Bugzilla) so that the developer can fix it.

After the fix, the defect goes back to testing, where the tester checks it again. If the tester finds that it hasn’t been fixed, they reopen it. This cycle can continue until the tester is satisfied that the bug has been resolved.
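The open, fix, retest and reopen cycle can be pictured as a small state machine. The sketch below is an assumption made for illustration: the status names and allowed transitions are hypothetical and do not reproduce the workflow of Jira, Bugzilla or any other tracker, where workflows are typically configurable.

```python
from enum import Enum


class BugStatus(Enum):
    OPEN = "open"
    FIXED = "fixed"        # developer reports a fix, awaiting retest
    REOPENED = "reopened"  # retest showed the bug is still present
    CLOSED = "closed"      # tester confirmed the fix


# Allowed transitions, modeled on the cycle described above (an assumption).
ALLOWED = {
    BugStatus.OPEN: {BugStatus.FIXED},
    BugStatus.FIXED: {BugStatus.REOPENED, BugStatus.CLOSED},
    BugStatus.REOPENED: {BugStatus.FIXED},
    BugStatus.CLOSED: set(),
}


class Bug:
    def __init__(self, title: str):
        self.title = title
        self.status = BugStatus.OPEN
        self.history = [BugStatus.OPEN]

    def move_to(self, new_status: BugStatus) -> None:
        """Change status, rejecting transitions the workflow does not allow."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"cannot go from {self.status.value} to {new_status.value}")
        self.status = new_status
        self.history.append(new_status)


def retest(bug: Bug, actual: str, expected: str) -> None:
    """Tester re-checks a fixed bug: close it on a match, otherwise reopen it."""
    bug.move_to(BugStatus.CLOSED if actual == expected else BugStatus.REOPENED)


bug = Bug("Login accepts an empty password")
bug.move_to(BugStatus.FIXED)                          # first fix attempt
retest(bug, actual="accepted", expected="rejected")   # still failing, reopened
bug.move_to(BugStatus.FIXED)                          # second fix attempt
retest(bug, actual="rejected", expected="rejected")   # fix confirmed, closed
print([status.value for status in bug.history])
```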

To increase the efficiency of quality control services, the QC team needs to decide which types of testing should be done manually and which should be done automatically. By dividing testing activities into manual and automated tests, testers can reduce the time and effort required to complete each task.

Types of testing to perform

Automated testing

Automated tests are run by a machine that executes a test script prepared in advance.

Automation usually applies to repetitive tasks as well as to other testing tasks that are difficult to complete manually.

Automated tests can be applied to various cases such as unit, API and regression testing. They can be executed repeatedly at any time of day.

By automating the testing, the QC team can save a significant amount of time and effort since all tests are run without human participation.

Automation also makes it possible to increase test coverage and improve testing accuracy. When testing a system manually, a test engineer can make a mistake, especially when the program contains thousands of lines of code. With automation, the QC team can avoid such human errors.

Manual checks

Manual testing, on the other hand, is performed by a human who executes the test scripts by clicking through the application. This is expensive and time-consuming, since it requires people to set up an environment and execute the tests by hand. Moreover, the human factor brings a high risk of mistakes, so manual testing tends to be less accurate than automated testing.

However, manual testing cannot be ignored or totally replaced by automation. It has its own strengths, and not all testing activities can be automated. A striking example is exploratory testing, where the process is usually unpredictable, involves a great deal of discovery and thinking as well as some prior knowledge of the application, and cannot be performed by a machine.

Therefore, the most efficient and comprehensive result is achieved when both techniques are used. Manual testing and automated testing complement each other.

Reporting

Following test execution, the QC team delivers a test report with a summary of all testing activities performed and the final test results. The report is more than an account of how well the testers have done their work. From it, stakeholders can get an idea of the quality of the tested product, which allows them to make an informed decision on whether or not to release the software.

In particular, the test report usually specifies the following:

- project information (project title, product name, and its version);
- test objective;
- number of test cases executed;
- number of test cases passed;
- number of test cases failed;
- pass percentage;
- fail percentage;
- number of bugs found, fixed, reopened, and deferred.
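The pass and fail percentages in such a report follow directly from the counts of executed, passed and failed test cases. Here is a minimal sketch of that arithmetic; the `ReportSummary` type, the `summarize` helper and the sample outcomes are hypothetical and not tied to any particular reporting tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReportSummary:
    executed: int
    passed: int
    failed: int

    @property
    def pass_percentage(self) -> float:
        # Guard against division by zero when nothing was executed.
        return 100.0 * self.passed / self.executed if self.executed else 0.0

    @property
    def fail_percentage(self) -> float:
        return 100.0 * self.failed / self.executed if self.executed else 0.0


def summarize(outcomes: List[str]) -> ReportSummary:
    """Count outcomes, where each entry is either "passed" or "failed"."""
    return ReportSummary(
        executed=len(outcomes),
        passed=outcomes.count("passed"),
        failed=outcomes.count("failed"),
    )


# Made-up outcomes for illustration: 18 passed and 2 failed test cases.
summary = summarize(["passed"] * 18 + ["failed"] * 2)
print(f"Executed: {summary.executed}")
print(f"Passed: {summary.passed} ({summary.pass_percentage:.1f}%)")
print(f"Failed: {summary.failed} ({summary.fail_percentage:.1f}%)")
```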

Verdict

In 2016, a 20-minute outage cost Amazon, the largest online retailer in North America, about $3.75 million.

History is replete with examples where software incidents resulted in similar damage. Nonetheless, testing is one of the most contentious issues in software development. Many product owners question its value as a separate process, putting their businesses and products at risk trying to save a few dollars.

Contrary to the widespread misconception that a tester’s sole responsibility is to find bugs, testing and quality assurance (QA) have a much broader impact on the success of the final product. Test engineers add value to the software and help ensure its quality by building a thorough understanding of the client’s business and of the product itself.

Furthermore, by leveraging their extensive product knowledge, testers can add value to the customer through additional services such as useful tips, guidelines, and product use manuals.